Reading Assistance for DHH Technology Workers

User-interface and text-simplification research to support DHH readers...

User-interface and computer-vision research for searching ASL dictionaries...

Video and motion-capture recordings collected from signers for linguistic research...

Enabling students learning American Sign Language (ASL) to practice independently through a tool that provides feedback automatically...

Analyzing eye-movement behaviors to automatically predict when a user is struggling to understand information content...

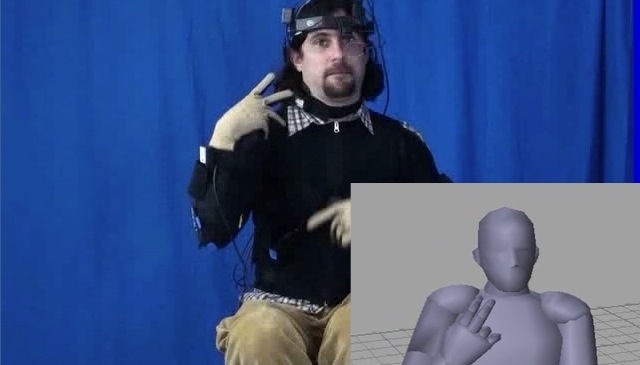

Collecting a motion-capture corpus of native ASL signers and modeling this data to produce linguistically accurate animations...

Developing technologies to automate the process of synthesizing animations of a virtual human character performing American Sign Language...

Analyzing English text automatically using computational linguistic tools to identify the difficulty level of the content for users...

Although the U.S. faces a shortage of computing and information technology professionals, people who are Deaf and Hard of Hearing (DHH) are underrepresented in these careers. Low English reading literacy among some DHH adults can be a particular barrier to computing professions, where workers must regularly "upskill" to learn about rapidly changing technologies throughout their careers.

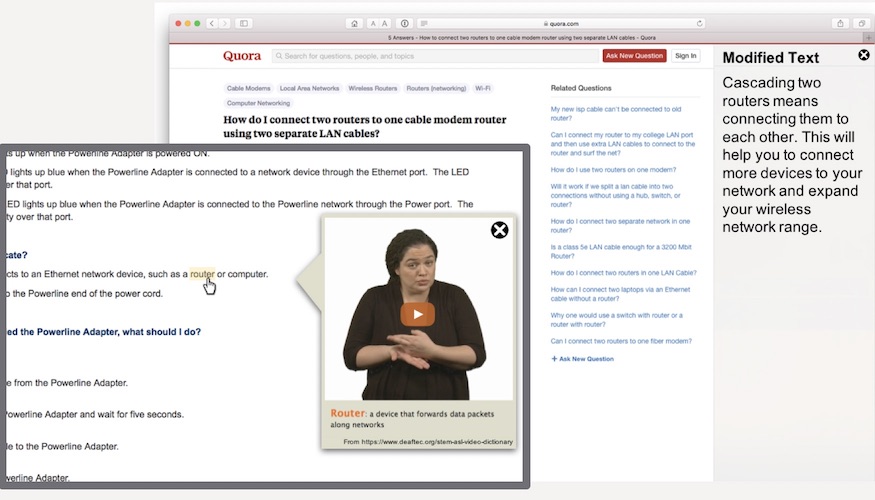

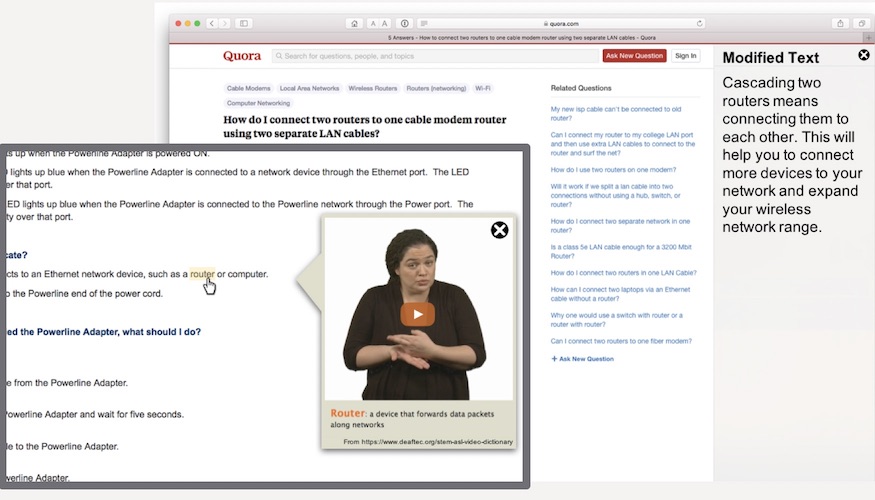

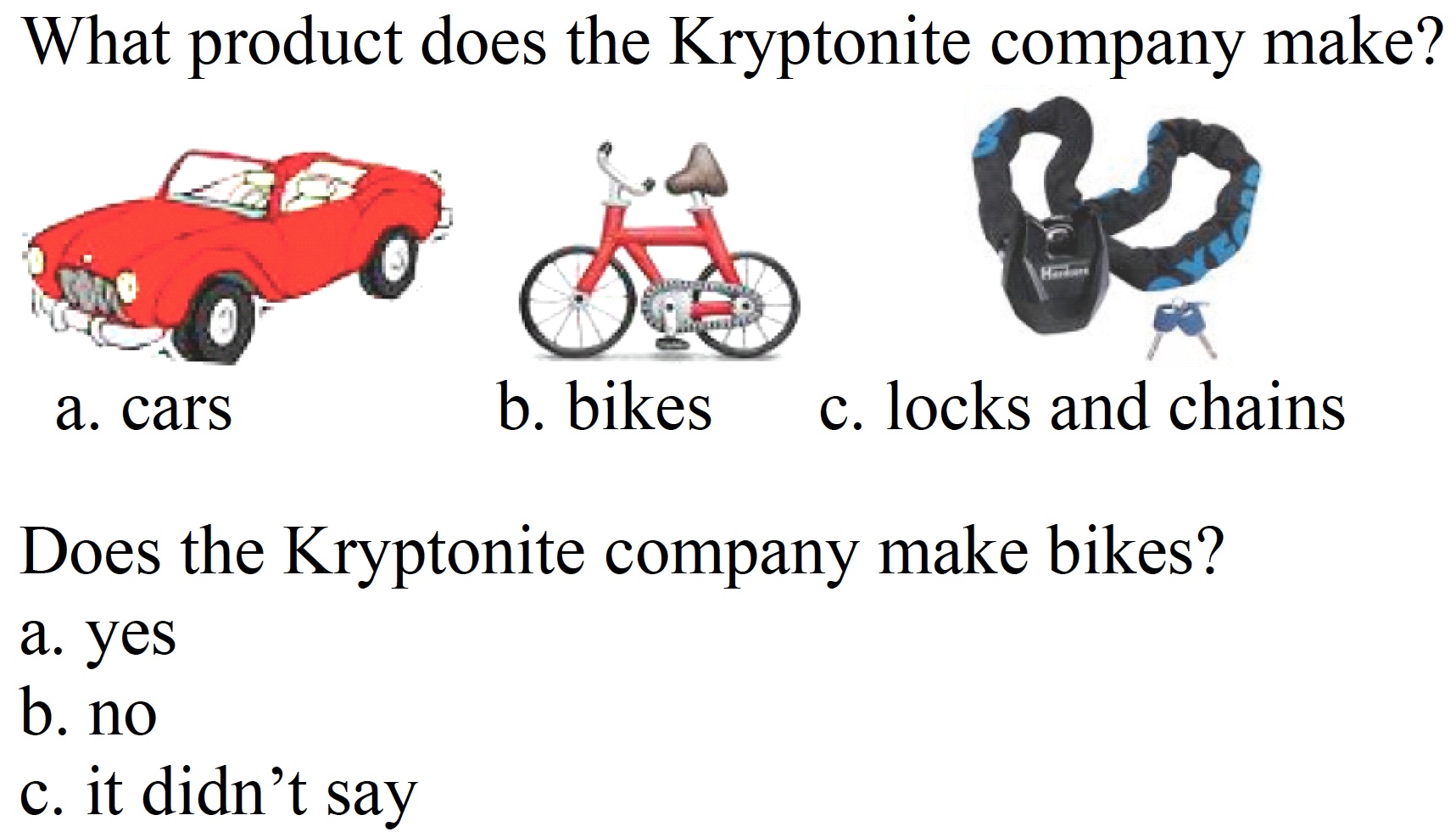

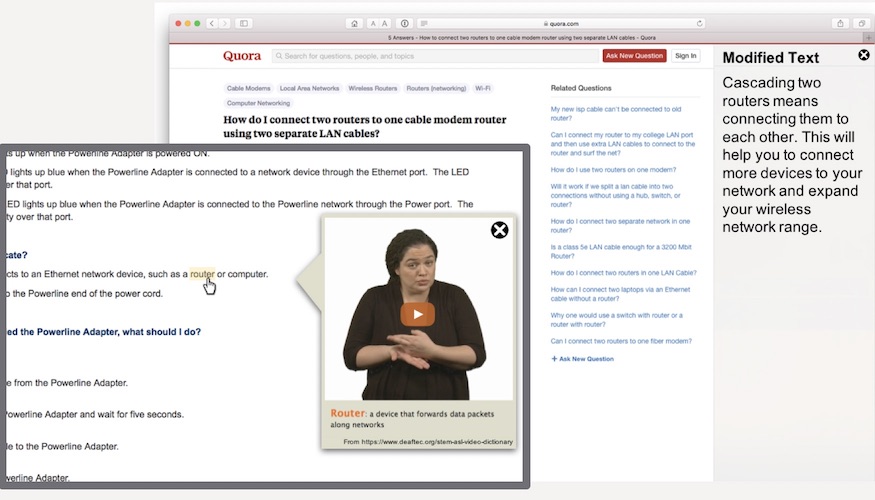

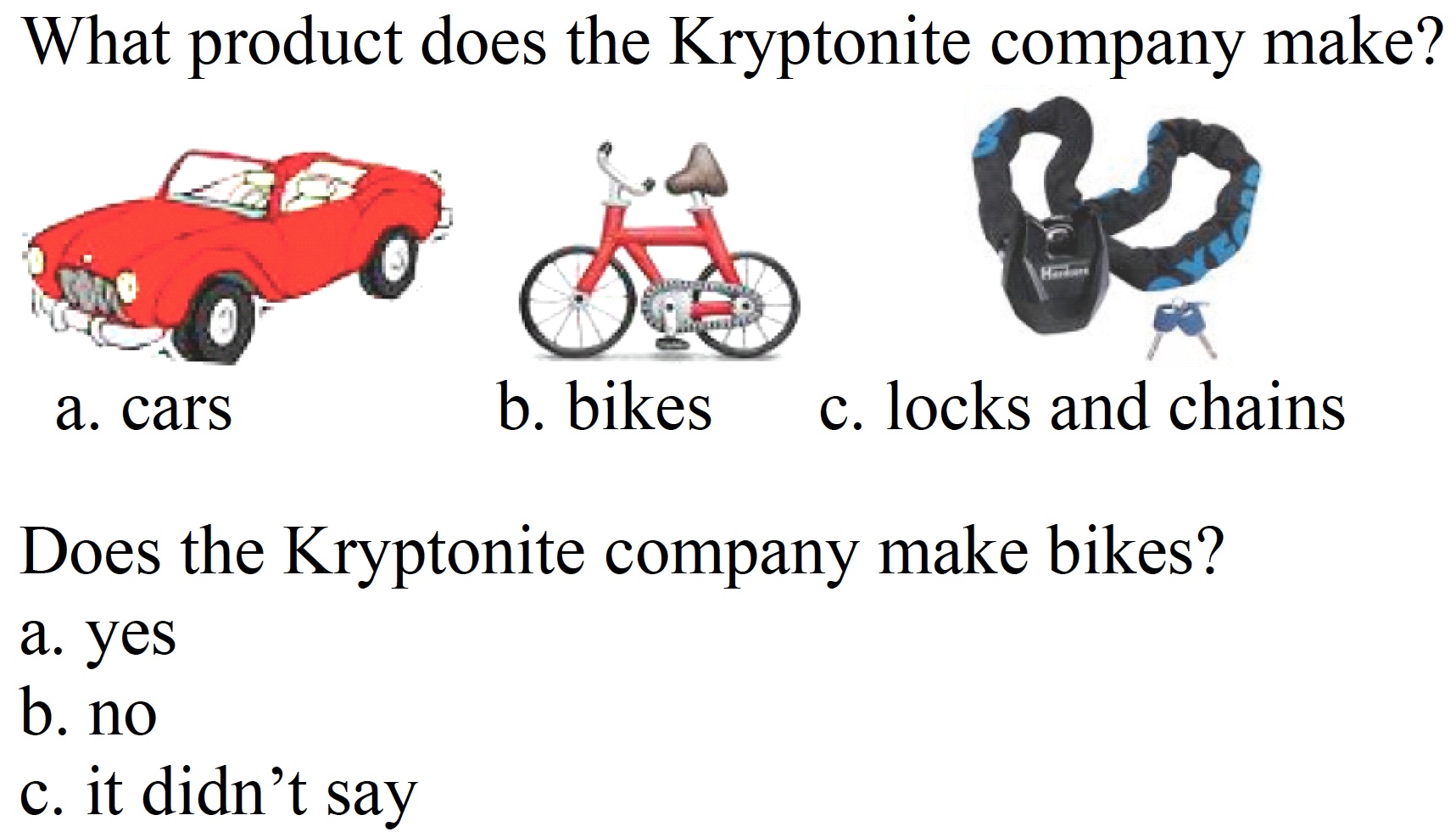

We investigate the design of a web-browser plug-in that provides automatic, on-demand English text simplification for DHH individuals. The plug-in may offer simpler synonyms or sign-language videos for complex English words, or simpler English paraphrases of sentences or entire documents. By embedding this prototype for use by DHH students as they learn about new computing technologies for workplace projects, we will evaluate the efficacy of our new technologies.
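As a rough illustration of the "simpler synonyms" idea, the sketch below swaps known complex words for simpler ones. It is only a minimal sketch under stated assumptions, not the project's implementation: the `SIMPLER_SYNONYMS` table and the `simplify_words` function are hypothetical names invented for this example, and a real system would need a far larger lexicon and context-aware word selection.

```python
import re

# Hypothetical lookup table (illustrative only): complex word -> simpler synonym.
SIMPLER_SYNONYMS = {
    "utilize": "use",
    "instantiate": "create",
    "deprecated": "outdated",
}


def simplify_words(text, synonyms=SIMPLER_SYNONYMS):
    """Replace each known complex word with a simpler synonym.

    Words not found in the table are left unchanged. If the original
    word starts with a capital letter, the replacement is capitalized.
    """
    def swap(match):
        word = match.group(0)
        simple = synonyms.get(word.lower())
        if simple is None:
            return word
        return simple.capitalize() if word[0].isupper() else simple

    # Visit every alphabetic token; punctuation and spacing stay untouched.
    return re.sub(r"[A-Za-z]+", swap, text)


simplified = simplify_words("Utilize the API to instantiate a client.")
```

A dictionary lookup like this ignores word sense, so it can pick a wrong synonym for ambiguous words; it is shown only to make the on-demand substitution step concrete.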

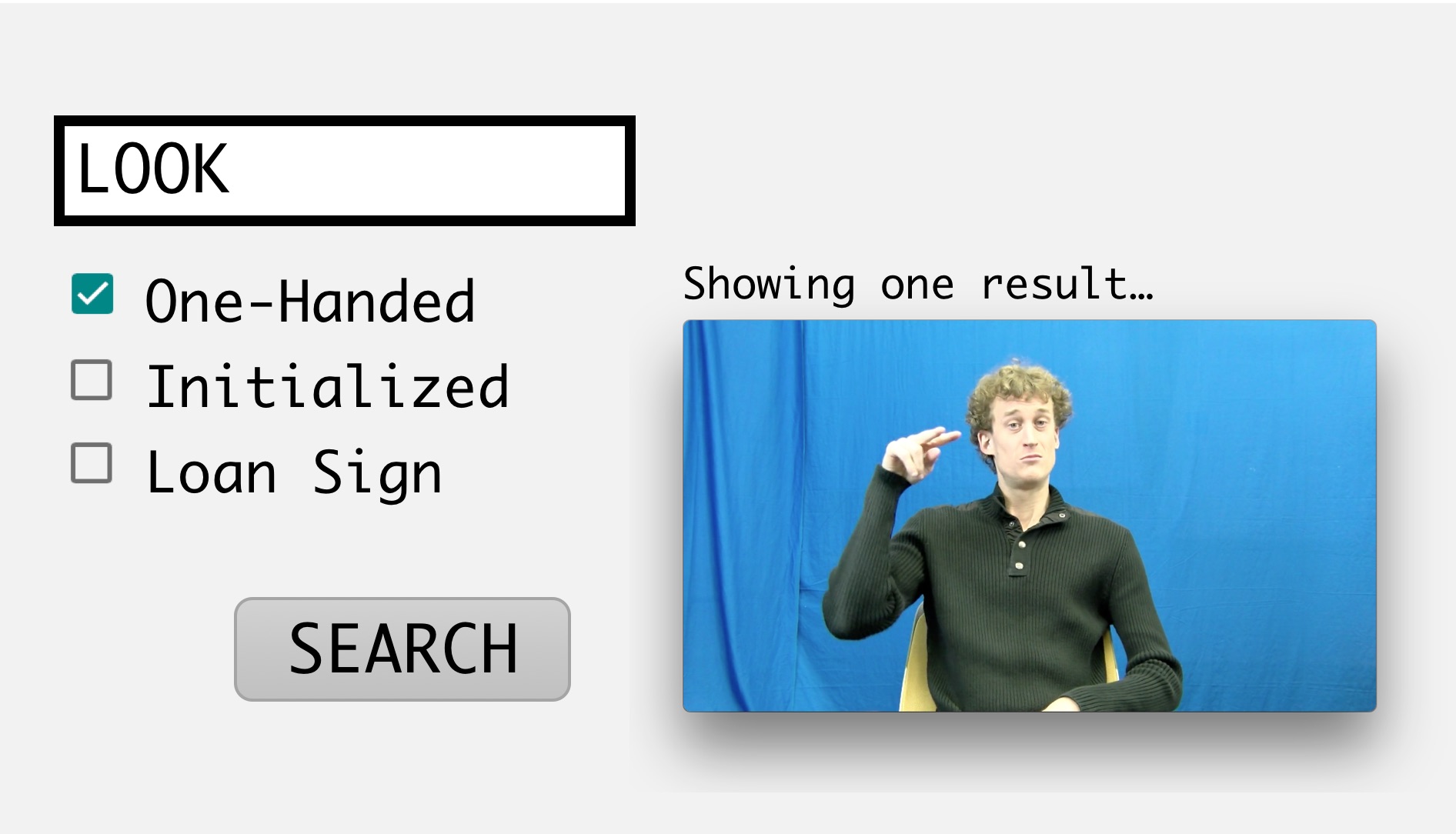

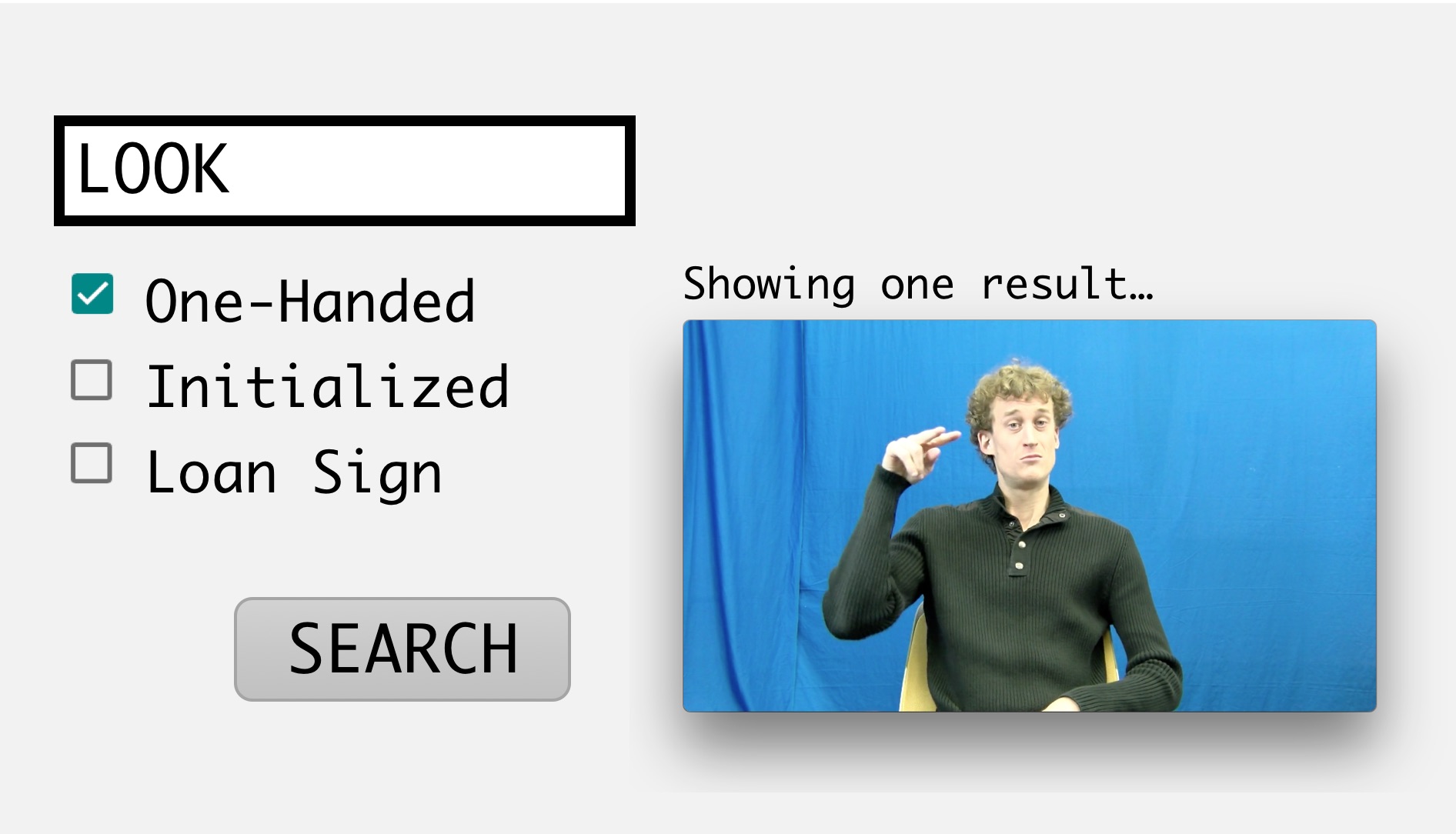

Looking up an unfamiliar word in a dictionary is a common activity in childhood or foreign-language education, yet there is no easy method for doing this in ASL. In this collaborative project with computer-vision, linguistics, and human-computer interaction researchers, we will develop a user-friendly, video-based sign-lookup interface for use with online ASL video dictionaries and resources, and for facilitating ASL annotation.

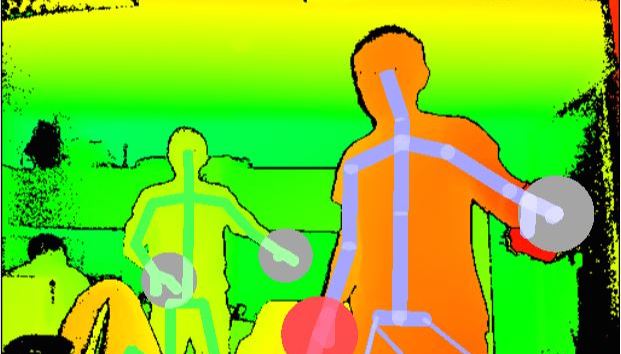

Huenerfauth and his students are collecting video and motion-capture recordings of native sign-language users, in support of linguistic research to investigate the extent to which signed languages evolved over generations to conform to the human visual and articulatory systems, and the extent to which the human visual attention system has been shaped by the use of a signed language within the lifetime of an individual signer.

Computer users may benefit from user interfaces that can predict whether the user is struggling with a task, based on an analysis of the user's eye-movement behaviors. This project is investigating how to conduct precise experiments for measuring eye movements and user task performance. Relationships between these variables can then be examined using machine-learning techniques in order to produce predictive models for adaptive user interfaces.
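To make the eye-movement analysis more concrete, the sketch below groups raw gaze samples into fixations using a dispersion-threshold approach (often called I-DT) and then summarizes them as features that a predictive model could consume. This is a generic, commonly used technique and not necessarily the method used in this project; the function names, the `(time_ms, x, y)` sample format, and the threshold values are all assumptions chosen for illustration.

```python
def detect_fixations(samples, max_dispersion=30.0, min_duration_ms=100):
    """Group gaze samples into fixations with a dispersion threshold.

    samples: list of (time_ms, x, y) tuples sorted by time (assumed format).
    Returns a list of (start_ms, end_ms, center_x, center_y) tuples.
    """
    def dispersion(window):
        xs = [p[1] for p in window]
        ys = [p[2] for p in window]
        return (max(xs) - min(xs)) + (max(ys) - min(ys))

    fixations = []
    i, n = 0, len(samples)
    while i < n:
        # Grow the initial window until it spans at least min_duration_ms.
        j = i
        while j < n and samples[j][0] - samples[i][0] < min_duration_ms:
            j += 1
        if j >= n:
            break  # Not enough remaining samples to form a fixation.
        if dispersion(samples[i:j + 1]) <= max_dispersion:
            # Extend the window while the gaze points stay close together.
            while j + 1 < n and dispersion(samples[i:j + 2]) <= max_dispersion:
                j += 1
            window = samples[i:j + 1]
            cx = sum(p[1] for p in window) / len(window)
            cy = sum(p[2] for p in window) / len(window)
            fixations.append((window[0][0], window[-1][0], cx, cy))
            i = j + 1
        else:
            i += 1  # Drop the first sample and try again.
    return fixations


def fixation_features(fixations):
    """Summarize fixations as simple numeric features for a classifier."""
    durations = [end - start for start, end, _, _ in fixations]
    count = len(durations)
    mean_duration = sum(durations) / count if count else 0.0
    return {"fixation_count": count, "mean_fixation_duration_ms": mean_duration}


# Synthetic 50 Hz gaze data: the eye rests at one point, then jumps to another.
gaze = [(k * 20, 100, 100) for k in range(10)]
gaze += [(200 + k * 20, 400, 300) for k in range(10)]
features = fixation_features(detect_fixations(gaze))
```

Features such as these could be paired with task-performance labels to train a classifier. The window dispersion is recomputed on every step for clarity rather than speed.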

An important branch of this research has investigated whether eye-tracking technology can be used as a complementary or alternative method of evaluation for animations of sign language, by examining the eye-movements of native signers who view these animations to detect when they may be more difficult to understand.

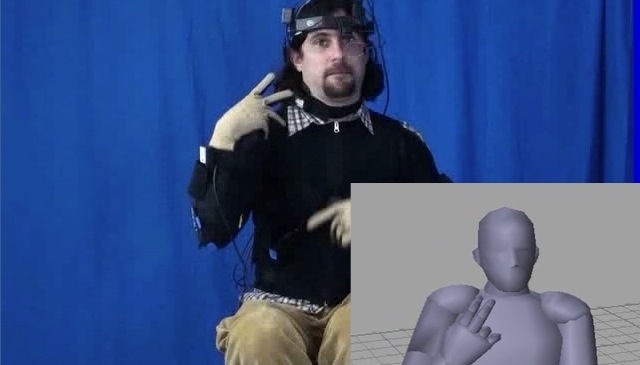

This project is investigating techniques for making use of motion-capture data collected from native ASL signers to produce linguistically accurate animations of American Sign Language. In particular, this project is focused on the use of space for pronominal reference and verb inflection/agreement.

This project also supported a summer research internship program for ASL-signing high school students, and REU supplements from the NSF have supported research experiences for visiting undergraduate students.

The motion-capture corpus of American Sign Language collected during this project is available for non-commercial use by the research community.

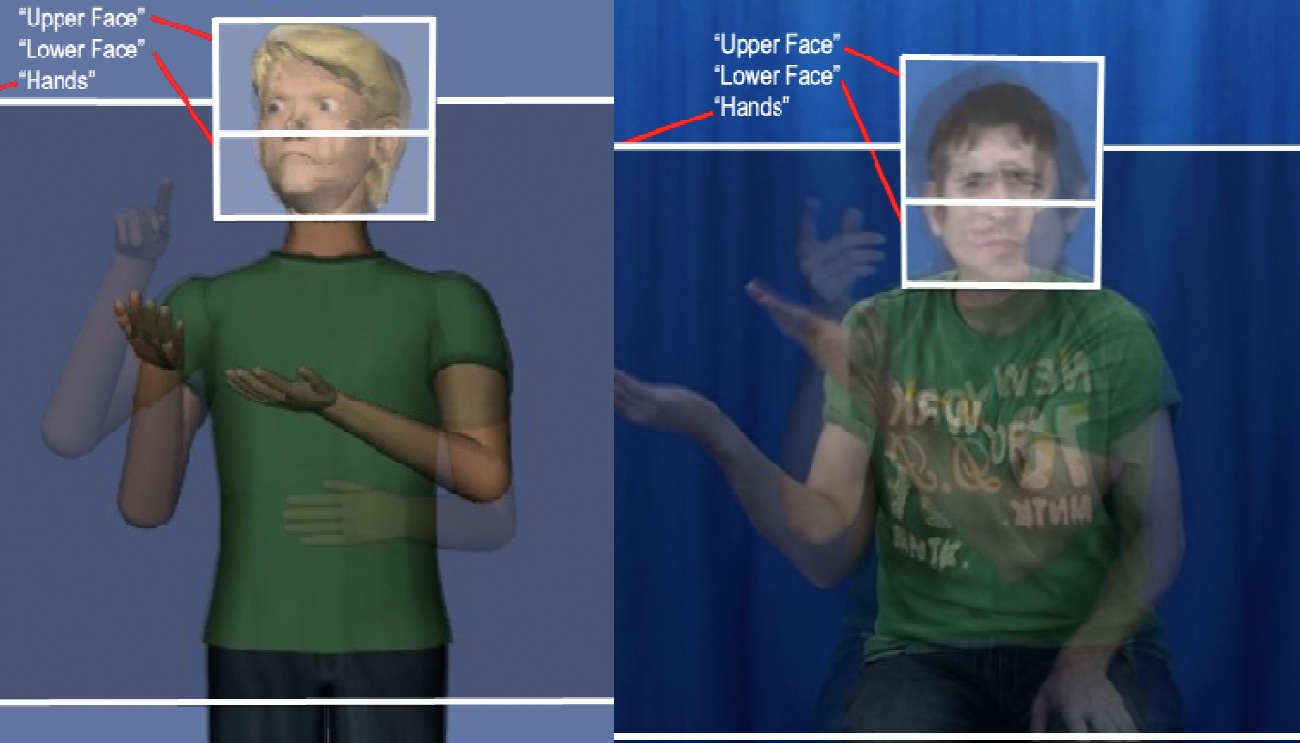

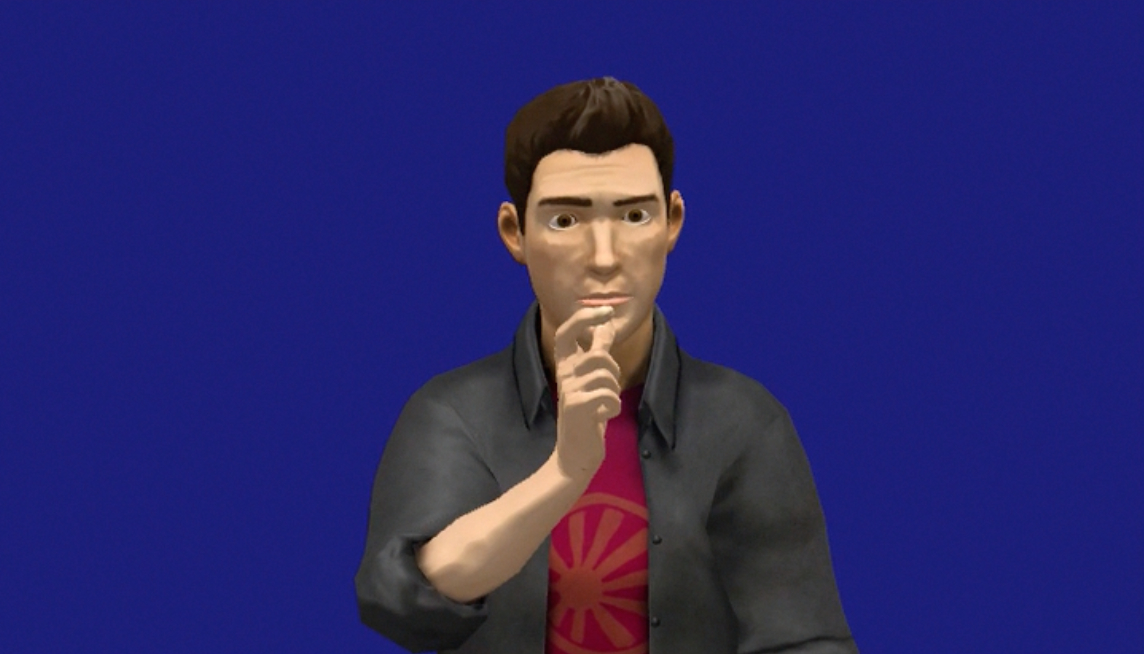

The goal of this research is to develop technologies to generate animations of a virtual human character performing American Sign Language.

The funding sources have supported various animation programming platforms that underlie research systems being developed and evaluated at the laboratory.

In current work, we are investigating how to create tools that enable researchers to build dictionaries of animations of individual signs and to efficiently assemble them to produce sentences and longer passages.

This project has investigated the use of computational linguistic technologies to identify whether textual information would meet the special needs of users with specific literacy impairments.

In research conducted prior to 2012, we investigated text-analysis tools for adults with intellectual disabilities. A state-of-the-art predictive model of readability was developed that was based on discourse, syntactic, semantic, and other linguistic features.
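The model described above relied on discourse, syntactic, semantic, and other linguistic features. As a much simpler stand-in, the sketch below computes a few surface-level text features of the kind that readability models often start from. The `surface_features` function and its feature names are hypothetical and are not taken from the project's model.

```python
import re


def surface_features(text):
    """Compute simple surface features of a text (illustrative only).

    Returns average sentence length in words, average word length in
    characters, and type-token ratio (distinct words / total words).
    """
    # Split on runs of sentence-ending punctuation; ignore empty pieces.
    sentences = [s for s in re.split(r"[.!?]+", text) if s.strip()]
    words = re.findall(r"[A-Za-z']+", text)
    if not sentences or not words:
        return {
            "avg_sentence_length": 0.0,
            "avg_word_length": 0.0,
            "type_token_ratio": 0.0,
        }
    return {
        "avg_sentence_length": len(words) / len(sentences),
        "avg_word_length": sum(len(w) for w in words) / len(words),
        "type_token_ratio": len({w.lower() for w in words}) / len(words),
    }


stats = surface_features("The cat sat. The dog ran fast.")
```

In a predictive setting, feature vectors like this would be fed to a regression or classification model trained on texts with known difficulty ratings; richer syntactic and discourse features would require a parser and are beyond this sketch.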

This research project has concluded. This project was conducted by Matt Huenerfauth and his students.

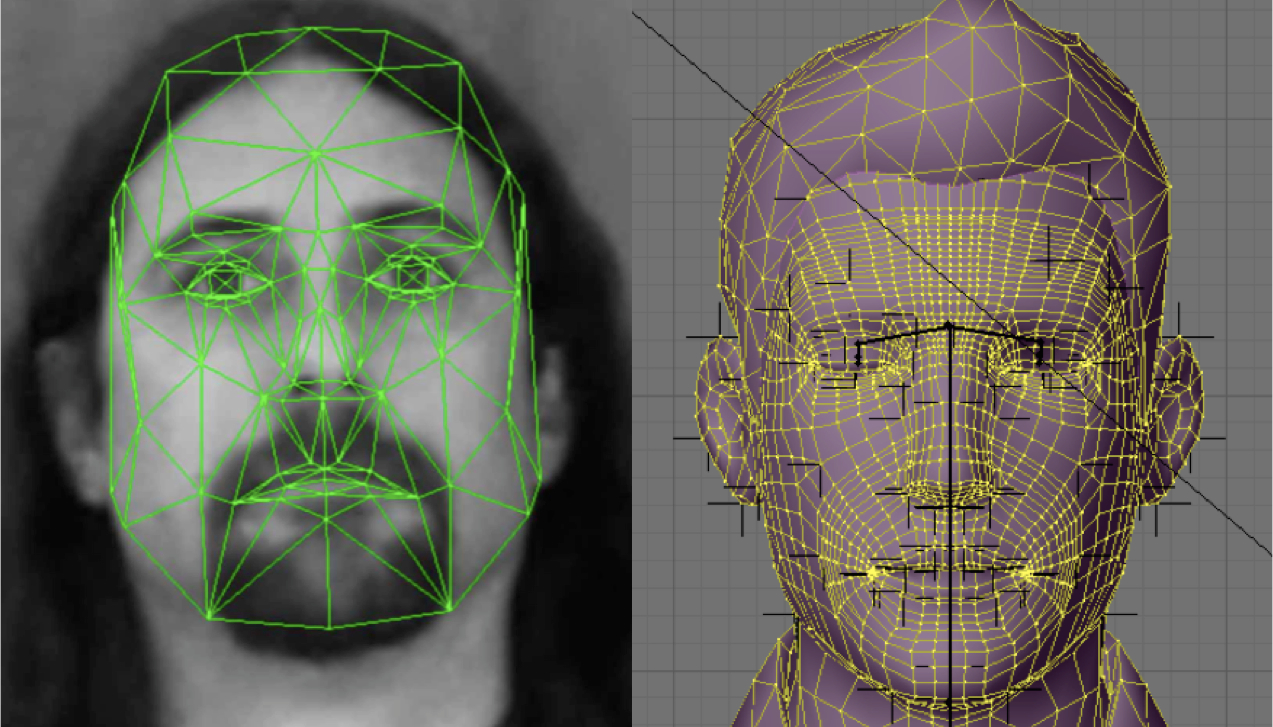

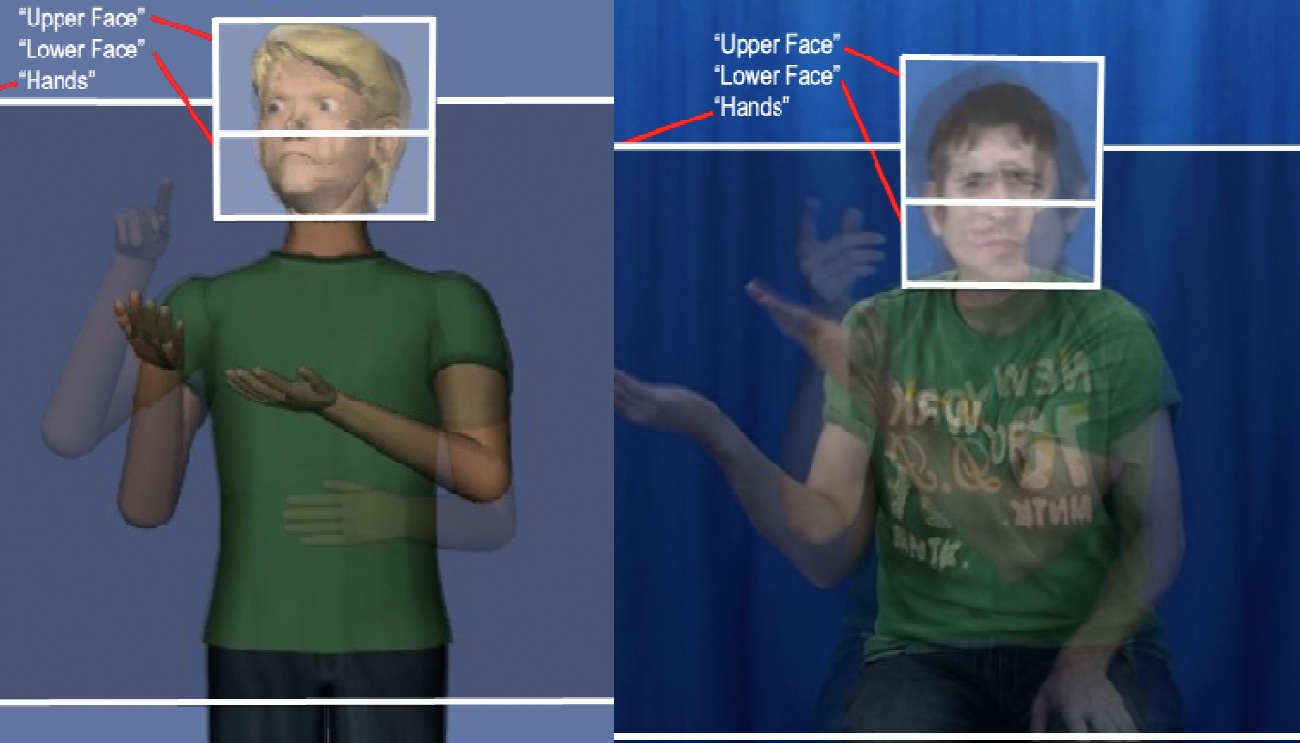

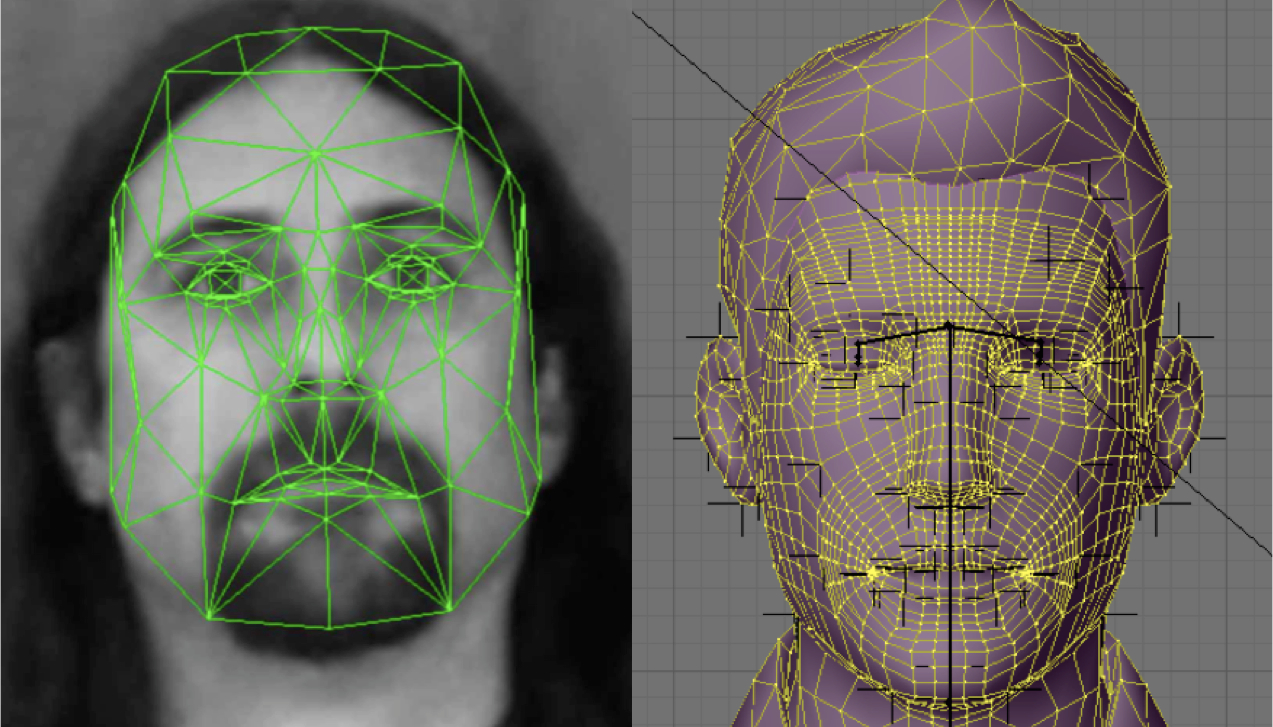

We are investigating techniques for producing linguistically accurate facial expressions for animations of American Sign Language; this would make these animations easier to understand and more effective at conveying information -- thereby improving the accessibility of online information for people who are deaf.

This research project has concluded. This project was joint work with researchers at Boston University and Rutgers University.